March has been quite an interesting month, and with so much going on it felt right to pop it all down on a page and get people’s thoughts on it. We are going to start with some website issues we have been involved with and then move on to the development of our new service line and our tool evaluation.

So what’s been going on in the world of websites, and why is that relevant to this blog? Well, let’s give you a bit of back story to bring you up to speed. As part of my personal development I have always wanted to understand website design, and having done rudimentary coding in PASCAL in the 1980s as part of my A Level Computer Studies, I have always felt confident “giving it a go”. Now don’t get me wrong: as a man in business myself, I acknowledge the need for skilled people doing skilled jobs, and if I ever needed a highly technical website I would send it out to the big boys. At present, though, my website is a simple marketplace, and I have also developed one for a local motorcycle club I am a member of. So where is this going, I hear you ask? Well, a couple of things have happened this month that tie into ITSM and are typical of what can be found in small businesses.

Firstly, the club website showed a drop in traffic. We use Google Analytics to monitor visits, and this week a single day showed no traffic from midnight to 12:00. A review of the normal pattern of visits showed this to be exceptional, so the support brain kicked in and a ticket was logged with the hosting company. In all fairness the service was very good: within 3 hours I had a reply saying that they could not see any server or network issues on my hosting instance, and advising me to contact Google to see whether they had any issues with analytics collection on that day. A few points from this. First, I can’t validate my hosting company’s reply, as I have no monitoring tools on the site (something I am now exploring), but a check with some users indicated that they had been able to get on the website, which supported what the hosting company was saying. Second, they had no reason to hide an issue. The hosting package has a declared uptime (which a 12-hour outage would have broken), but with no service credits payable they were not at risk of any significant penalty. The point of reflection was that the supplier’s response (its timeliness and detail) gave me confidence and reassurance. Just as importantly, because I had the service contact details to hand I was able to raise the issue quickly, which showed that in the event of a more significant issue I could mobilise quickly and the supplier would respond.
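For anyone wanting to make that “review of the normal pattern” repeatable, here is a minimal Python sketch. It assumes hourly visit counts have been exported from the analytics tool as lists of 24 numbers; the function names and the thresholds (a quiet run of at least 3 hours, at least 1 expected visit per hour) are our own illustrative choices, not anything Google Analytics provides.

```python
from statistics import mean


def zero_traffic_runs(hourly_visits):
    """Return (start, end) hour pairs, end exclusive, for each run of zero-visit hours."""
    runs = []
    start = None
    for hour, visits in enumerate(hourly_visits):
        if visits == 0 and start is None:
            start = hour  # a quiet run begins
        elif visits != 0 and start is not None:
            runs.append((start, hour))  # the run ended at the previous hour
            start = None
    if start is not None:
        runs.append((start, len(hourly_visits)))  # the run lasted to the end of the day
    return runs


def is_exceptional(today, history, min_run_hours=3, min_hourly_baseline=1.0):
    """Flag a day whose zero-traffic runs fall in hours that normally see visits.

    today:   24 hourly visit counts for the day under review
    history: earlier days, each a list of 24 hourly visit counts
    Assumption: thresholds are illustrative defaults, tune them to your own site.
    """
    # Average visits for each hour of the day across the earlier days.
    baseline = [mean(day[h] for day in history) for h in range(24)]
    for start, end in zero_traffic_runs(today):
        length = end - start
        if length < min_run_hours:
            continue  # short lulls are normal for a small club site
        expected_per_hour = sum(baseline[start:end]) / length
        if expected_per_hour >= min_hourly_baseline:
            return True
    return False
```

With a week of history that is always quiet from midnight to 06:00, a day with no visits from midnight to 12:00 is flagged, while an ordinary quiet night is not. A flag is a prompt to check rather than proof of an outage: as the story above shows, a gap in analytics can just as easily be a data collection problem.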

My second journey into the world of website ITSM came from another local motorcycle club that was having different issues with its website. They had commissioned a rewrite but unfortunately did not have the contact information or the authority to deal with the outgoing domain’s hosting company. This left them in limbo: the established website could not be updated or taken down, yet it held out-of-date information and was still the site being ranked by Google. In this case, tracing back via Whois allowed us to identify the hosting company and the nominated domain holder, and through open engagement with them we are aiming to move things forward.
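The Whois trace can be done from any online lookup page, but the underlying protocol is simple enough to sketch: WHOIS (RFC 3912) is plain text over TCP port 43, where the client sends the query followed by CRLF and the server replies and closes the connection. The minimal Python sketch below assumes IANA’s server (`whois.iana.org`) as a starting point, which typically answers with a `refer:` line naming the registry’s own WHOIS server; the function names are our own, and the fields returned vary from registry to registry.

```python
import socket


def whois_query(domain, server="whois.iana.org", port=43, timeout=10):
    """Send a WHOIS query (plain text over TCP port 43) and return the raw reply."""
    with socket.create_connection((server, port), timeout=timeout) as sock:
        sock.sendall((domain + "\r\n").encode("ascii"))
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:
                break  # the server closes the connection when the reply is complete
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")


def parse_whois(text):
    """Collect 'Key: Value' lines into a dict of lists, with lower-cased keys."""
    fields = {}
    for line in text.splitlines():
        if line.startswith(("%", "#")):
            continue  # comment lines in many WHOIS replies
        key, sep, value = line.partition(":")
        if not sep or not value.strip():
            continue  # blank lines and section headings
        fields.setdefault(key.strip().lower(), []).append(value.strip())
    return fields
```

A typical two-step trace would be `parse_whois(whois_query("example.com")).get("refer")` to find the registry’s server, then a second `whois_query` against that server to look for the registrar and hosting details.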

So back to the first club website (the one I maintain) and off in a new direction. You see, there is a limit to self-taught skill. It involves books and the internet, and it generally takes time when you want to do something different or have an issue. That’s OK when nothing about the site is critical. We don’t sell anything; it’s a place where people go to see where the next competition is, pick up the results and read an event report. Add in a couple of pages about venues and rebuild projects and that’s about it. It’s built using Bootstrap, is mobile friendly and uses Google Analytics for some visit stats.

The problem was that we had a big event coming up where we were hosting a National round, and I wanted to put some video on the site. Simple, I thought: I have some video, I can use Google to find the code, easy! Nope, not easy. Why not? Well, for a start I found 4 different versions of the recommended code and different views on whether the video should be MP4 or MPEG-4. The result was videos that would work on desktops but not Android, so I tried something else and they would work on desktop and Android but not play on iOS. A nightmare! So was there a workaround? I know, upload to YouTube and use the embedded YouTube code. But then during testing (another story), one club member said it would not run on his iPad. Was this a single-user issue or a bug with one of the iOS versions, I wondered. So what did I do? Well, after 3 evenings of frustration I reached out to my network of developers, and within a few hours I had the code snippet, the right video format and a link to a conversion tool, and after a bit of testing the issue was fixed.

Why is this relevant? Well, the final part of this blog moves into a service offering we have been developing. Each of these website issues (and in fact all of the tickets for our own business) has been logged on an ITSM tool. Within that we hold a basic service overview and information relating to support contracts (e.g. the hosting company’s email address / support number and a copy of the contract). When the analytics suggested the site may have been down, it was easy to get straight on to the hosting company, because the information was available and a single point of contact managed the issue. Now, in our case none of these issues were critical or impacted trade, profit or customer service, but by managing them through an incident lifecycle we were able to control them, get good visibility of where we were and, hopefully, prevent them happening again.
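To show what “managing them through an incident lifecycle” means in practice, here is a minimal Python sketch of an incident record that can only move between allowed states and keeps a history of every move. The state names and transitions are hypothetical and deliberately simple; they are not taken from either of the ITSM tools we are evaluating.

```python
from dataclasses import dataclass, field
from datetime import datetime

# Hypothetical lifecycle: which states each state may move to.
TRANSITIONS = {
    "logged": {"assigned"},
    "assigned": {"with_supplier", "resolved"},
    "with_supplier": {"assigned", "resolved"},
    "resolved": {"closed", "assigned"},  # reopen if the fix did not hold
    "closed": set(),
}


@dataclass
class Incident:
    ref: str
    summary: str
    supplier_contact: str = ""  # e.g. the hosting company's support email
    state: str = "logged"
    history: list = field(default_factory=list)

    def move_to(self, new_state, note="", when=None):
        """Change state if the lifecycle allows it, recording who-did-what as history."""
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"cannot move {self.ref} from {self.state} to {new_state}")
        # Each history entry is (timestamp, old state, new state, note).
        self.history.append((when or datetime.now(), self.state, new_state, note))
        self.state = new_state
```

The analytics ticket above would run `logged`, `assigned`, `with_supplier` (ticket raised with the hosting company), `resolved`, `closed`; the history is what gives the “good visibility of where we were”.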

Just imagine that in your own small business: a single support desk where you can log your issues, and where they take ownership of the problem, manage the supplier, keep you updated on progress and report on how often these things happen.

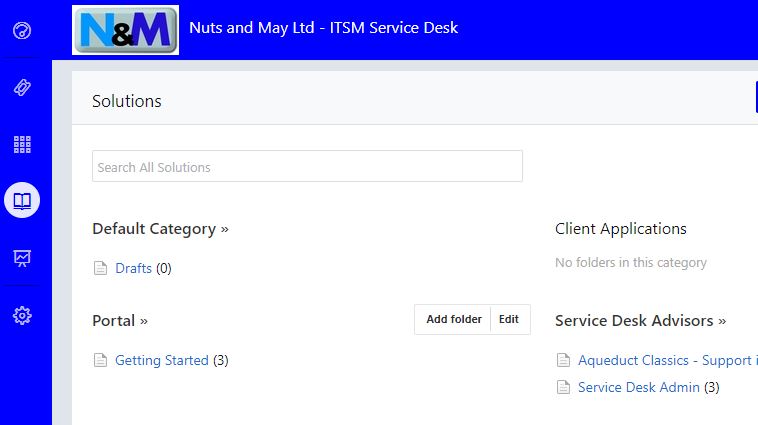

As part of this process we have been assessing two ITSM tools that meet the needs of the organisation and keep costs down, and that, beyond incident management (as outlined above), allow us to develop other offerings such as controlling system changes and releases, and collating your repeating issues into problem statements and then driving out service improvements to remove them.

In order to refine this we are actively trying to identify two small businesses to assist us. We are specifically looking for the following:

1) A small business with between 10 – 50 IT-dependent users. Ideally these will be spread over up to 3 sites (though some may be remote users) and use a range of 2 – 5 applications.

2) An independent software developer selling their product via online marketplaces such as Google Play, who would benefit from a single point of contact for their users.

So what is the deal? Well, from us you get the following:

- Set up of the Service Desk and all associated information to map your services into a support model

- 3 months of Mon – Fri (09:00 – 17:00) Service Desk Incident Management

- Calls logged via email or dedicated portal

- Incidents managed to a target 4-hour response

- Weekly service reporting
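A 4-hour response target inside a Mon – Fri 09:00 – 17:00 window raises the obvious question of when the clock runs. Here is a minimal Python sketch, assuming (our assumption, not a contract term) that the clock only runs during service hours, and ignoring bank holidays and time zones. A call logged at 15:00 on a Friday would then be due a response by 11:00 on Monday.

```python
from datetime import datetime, timedelta

OPEN, CLOSE = 9, 17  # service desk hours, Monday to Friday


def response_deadline(logged, target=timedelta(hours=4)):
    """Return when a response is due if the target clock only runs in service hours.

    Assumption: naive local times, no bank holidays.
    """
    remaining = target
    current = logged
    while True:
        day_open = current.replace(hour=OPEN, minute=0, second=0, microsecond=0)
        day_close = current.replace(hour=CLOSE, minute=0, second=0, microsecond=0)
        if current.weekday() >= 5 or current >= day_close:
            # Weekend or after hours: move to 09:00 the next day and re-check.
            current = day_open + timedelta(days=1)
            continue
        if current < day_open:
            current = day_open  # logged before opening: clock starts at 09:00
        available = day_close - current
        if remaining <= available:
            return current + remaining
        remaining -= available  # use up the rest of today, carry the balance over
        current = day_open + timedelta(days=1)
```

Counting only service hours is the more common convention for a desk that is not staffed around the clock; if the target were in elapsed time instead, the deadline would simply be `logged + target`.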

As this is an opportunity for us to develop our service, we are working to the following pricing model:

- 3 month commitment from both parties. No lock in after that point.

- Clear banded pricing model starting at 0 – 50 incidents per week

- Significantly discounted against our expected price to market, as a thank you for helping us develop our offering
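Since the pricing bands are based on incidents per week, the weekly report needs that count. Here is a minimal Python sketch that groups logged incident dates by ISO week and notes whether each week falls within the first band (0 – 50 incidents per week). The function name and output layout are illustrative only, and the higher bands are deliberately left out.

```python
from collections import Counter
from datetime import date

FIRST_BAND_MAX = 50  # the 0 - 50 incidents per week band


def weekly_report(logged_dates):
    """Count incidents per ISO week and note whether each week fits the first band."""
    # isocalendar()[:2] gives (ISO year, ISO week number) for each date.
    counts = Counter(d.isocalendar()[:2] for d in logged_dates)
    return [
        {"year": year, "week": week, "incidents": n, "within_first_band": n <= FIRST_BAND_MAX}
        for (year, week), n in sorted(counts.items())
    ]


if __name__ == "__main__":
    sample = [date(2024, 3, 4), date(2024, 3, 6), date(2024, 3, 12)]
    for row in weekly_report(sample):
        print(row)
```

ISO weeks run Monday to Sunday, which lines up with a Mon – Fri service week.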

If you have enjoyed reading this article and would like to discuss it in more detail, or would like to know more about our service offering, we would welcome your thoughts.